What is Airflow?

Apache Airflow is an open-source platform for developing, scheduling, and monitoring batch-oriented workflows. Airflow’s extensible Python framework enables you to build workflows that connect with virtually any technology. A web interface helps you manage the state of your workflows. Airflow can be deployed in many ways, from a single process on your laptop to a distributed setup that supports even the largest workflows.

Workflows as code

The main characteristic of Airflow workflows is that all workflows are defined in Python code. “Workflows as code” serves several purposes:

- Dynamic: Airflow pipelines are configured as Python code, allowing for dynamic pipeline generation.

- Extensible: The Airflow framework contains operators to connect with numerous technologies. All Airflow components are extensible to easily adjust to your environment.

- Flexible: Workflow parameterization is built in, using the Jinja templating engine.

Why Airflow?

Airflow is a batch workflow orchestration platform. The Airflow framework contains operators to connect with many technologies and is easily extensible to connect with a new technology. If your workflows have a clear start and end, and run at regular intervals, they can be programmed as an Airflow DAG (directed acyclic graph).
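To make the DAG idea concrete: a DAG is a directed acyclic graph in which tasks are nodes and dependencies are edges. The sketch below does not use Airflow itself. It uses Python's standard `graphlib` module to show how a set of tasks generated in a loop can be ordered so that every task runs after the tasks it depends on. The table names, task names, and the helpers `build_dependencies` and `execution_order` are hypothetical and chosen only for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical source tables; one extract/load pair is generated per table.
TABLES = ["orders", "customers"]


def build_dependencies(tables):
    """Map each task name to the set of tasks that must finish before it."""
    deps = {"start": set(), "finish": set()}
    for table in tables:
        deps[f"extract_{table}"] = {"start"}
        deps[f"load_{table}"] = {f"extract_{table}"}
        # The final task waits for every load task.
        deps["finish"].add(f"load_{table}")
    return deps


def execution_order(deps):
    """Return one valid order in which the tasks could run."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(build_dependencies(TABLES))
print(order)
```

Because the graph is built in ordinary Python, adding a table to `TABLES` adds its tasks without any other change. A dependency cycle would make `static_order()` raise `graphlib.CycleError`, which is why the graph has to be acyclic.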

If you prefer coding over clicking, Airflow is the tool for you. Workflows are defined as Python code, which means:

- Workflows can be stored in version control so that you can roll back to previous versions

- Workflows can be developed by multiple people simultaneously

- Tests can be written to validate functionality

- Components are extensible and you can build on a wide collection of existing components
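As an illustration of the testing point above, the logic a task runs can be kept in a plain Python function and checked in isolation. This is a minimal sketch that does not import Airflow; `clean_record` and its field names are hypothetical stand-ins for whatever a real task would do.

```python
def clean_record(record):
    """Hypothetical task logic: trim whitespace and lower-case the email."""
    return {
        "name": record["name"].strip(),
        "email": record["email"].strip().lower(),
    }


def test_clean_record():
    """Check the task logic without running a scheduler or a pipeline."""
    raw = {"name": "  Ada ", "email": " Ada@Example.COM "}
    assert clean_record(raw) == {"name": "Ada", "email": "ada@example.com"}


# The test is an ordinary function, so it can be called directly
# or collected by a test runner.
test_clean_record()
```

Keeping task logic in importable functions like this is one possible design choice; it lets the same code be exercised by tests and by the workflow.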

Rich scheduling and execution semantics enable you to easily define complex pipelines, running at regular intervals. Backfilling allows you to (re-)run pipelines on historical data after making changes to your logic. And the ability to rerun partial pipelines after resolving an error helps maximize efficiency.
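Conceptually, a backfill runs the same pipeline once for each past interval in a date range. The sketch below illustrates that idea with the standard library only. It is not Airflow's scheduler logic, and `daily_intervals`, `backfill`, and the `run` callback are hypothetical names introduced for this example.

```python
from datetime import date, timedelta


def daily_intervals(start, end):
    """Yield (interval_start, interval_end) pairs covering [start, end) by day."""
    current = start
    while current < end:
        following = current + timedelta(days=1)
        yield current, following
        current = following


def backfill(start, end, run):
    """Call run(interval_start, interval_end) for each interval, oldest first."""
    return [run(s, e) for s, e in daily_intervals(start, end)]


# Stand-in for real pipeline logic: report which day was processed.
processed = backfill(date(2024, 1, 1), date(2024, 1, 4), lambda s, e: s.isoformat())
print(processed)  # ['2024-01-01', '2024-01-02', '2024-01-03']
```

Since each run receives its interval as an argument, changed logic can be re-applied to historical data by calling `backfill` again over the same range.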

Airflow’s user interface provides both in-depth views of pipelines and individual tasks, and an overview of pipelines over time. From the interface, you can inspect logs and manage tasks, for example retrying a task in case of failure.

The open-source nature of Airflow ensures you work on components developed, tested, and used by many other companies around the world. In the active community you can find plenty of helpful resources in the form of blog posts, articles, conferences, books, and more. You can connect with peers via several channels such as Slack and mailing lists.

UI/Screenshots

As an Airflow developer, you should consider the Why, What, and How of product use.

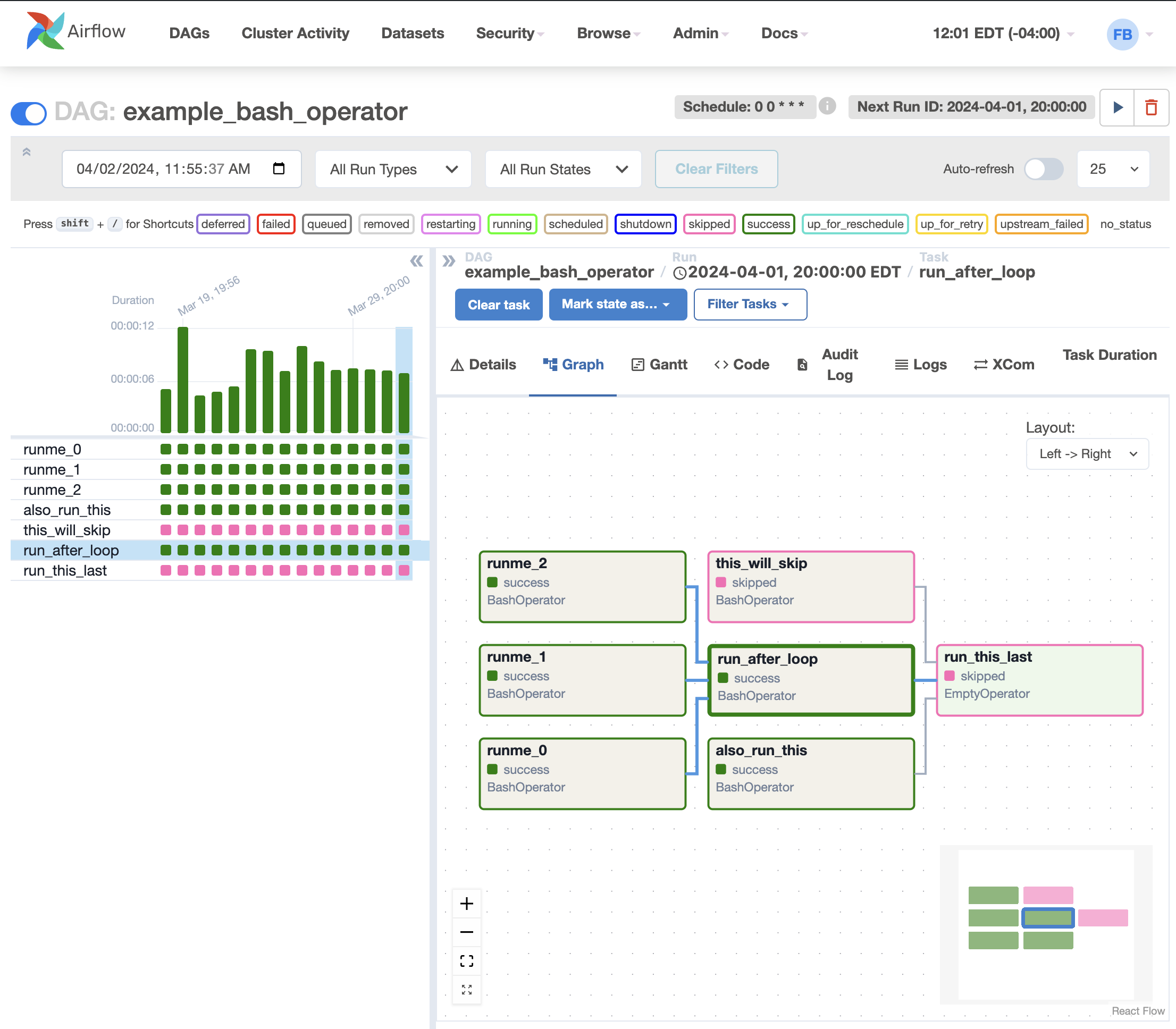

Graph View

The graph view is perhaps the most comprehensive. Visualize your DAG’s dependencies and their current status for a specific run.

Designing in Airflow

Integrations

A Design System is a set of interconnected patterns and shared practices coherently organized to aid in digital product design and development of products such as apps or websites.

Learning resources

First Warsaw Airflow Meetup

Apache Airflow YouTube Channel - Official YouTube Channel

Airflow Summit - Online conference for Apache Airflow developers

Awesome Apache Airflow - Curated list of resources about Apache Airflow

The Complete Hands-On Introduction to Apache Airflow by Marc Lamberti on Udemy

Apache Airflow: Complete Hands-On Beginner to Advanced Class by Alexandra Abbas on Udemy